Early Literacy Screening and Data Stories

- Brent Conway

- Oct 27, 2025

Educators have been testing students for as long as they have been teaching them. It’s hard to separate testing and teaching: if we teach something, we want to know how well a student has learned it. But not all tests are the same. When it comes to literacy specifically, the tests and assessments you use should depend on the purpose of the information and how it will be used. We certainly don’t want to test students just for the sake of doing it. Pentucket has developed a pretty systematic way of using different types of assessments to guide what we teach all students, to identify which students may need additional support, and to pinpoint the skills a student may need targeted instruction on.

Purpose of screening

In the fall of 2024, Massachusetts voters approved a ballot question that removed the MCAS (the state assessment in Literacy, Math and Science) as a graduation requirement. State and federal regulations still require students to take the MCAS, but students no longer have to pass it in 10th grade in order to graduate. While this change may have been encouraged by folks who felt there was too much emphasis on testing, when it comes to literacy, having the right information at the right time from valid and reliable assessments is often the difference between a lifetime of reading difficulties and a life in which a student uses reading to gain access to so many other things.

The MCAS was never meant to be an assessment that informs instruction: it’s a broad measure of reading, the results reach schools the year after testing, and students don’t even begin taking it until the end of 3rd grade. However, the Massachusetts early literacy screening law, which took effect on July 1, 2023, requires all public school districts to assess students in kindergarten through third grade for early literacy skills at least twice a year using a Department of Elementary and Secondary Education (DESE) approved screening tool. Pentucket had already been using one of those approved early literacy screeners for a number of years. For Pentucket, DIBELS 8 gave our educators a consistent tool that was both valid and reliable. A valid tool assesses what it claims to assess. A reliable tool produces consistent results regardless of the circumstances, so those using the results can trust that they are likely an accurate depiction of each child’s skills. Having this information, and building a system to make sure it is actionable, is possibly the most critical thing we have as a district for our youngest readers. Waiting for data until students reach 4th grade has proven to be far too late. This has been known for many years, and MA also participated in a multi-year study with WestEd that looked at early screening results from thousands of students in districts across the state.

Pentucket participated in this WestEd study in MA, which produced the graph above. While the data included K-3 students screened with several different screeners, all of the screeners met the criteria for valid and reliable early literacy screeners. What is not controlled here is what each school actually did to improve student outcomes, but it is safe to say they did something. The graph clearly shows that the percentage of students at risk for reading failure at the start of the year declined by the middle of the year, and even more by the end of the year. That rate of change was most noticeable in K and Grade 1. It was also evident in Grade 2, but by the time students reached 3rd grade, very few of those who started the year below grade level in literacy skills changed their risk level by the end of the year.

The screeners are not complete assessments, and they are not diagnostic in the sense that a teacher would know precisely where the skill breakdown is. But a good screener, like DIBELS 8, uses multiple quick measures to look at a student's ability to perform certain early literacy skills automatically, that is, quickly and accurately. That automaticity has proven to be a strong predictor of future reading proficiency. Many of the subtests are given one-on-one, which gives the teacher a chance to see how the student performs each skill. While letter naming is not reading itself, it is correlated with becoming a strong reader. Similarly, reading nonsense words is not reading for meaning, but it demonstrates a student's ability to quickly apply learned phonics patterns to words that cannot have been memorized by sight, because they are not real words. When students struggle with some of these foundational skills, it is a red flag that they need additional, targeted instruction.

Different from Diagnostic or Summative Assessments

Diagnostic assessments are a critical tool, but mainly for students who continue to have difficulty with a particular skill even after high-quality instruction. Often the screener provides enough information for us to use our evidence- and research-based curriculum to address the needs that show up. By monitoring a student’s progress with targeted instruction, we know whether the additional, more intensive instruction is working for that student. If it isn’t, we can increase the time or even the intensity of the instruction. Sometimes, however, we simply don’t get the response we would expect, and that is often when a diagnostic tool is needed so a practitioner or team of educators can home in on where the breakdown may be coming from. Some diagnostic tools can be part of a tiered intervention model, especially when a young student is having difficulty. Many others are part of the special education evaluation process, where they can be used alongside teacher observation and curriculum-based measures to paint a picture of a student’s skills and needs. The team can then make even more informed decisions about an instructional approach designed to meet those needs.

When this process is done well, by the time a diagnostic assessment is used, the team can safely assume that the student has already received evidence-based instruction and additional targeted support. Curriculum-based measures are meant to measure a student’s progress over a fairly short period of time, essentially showing how successful a particular instructional approach was at improving the student’s performance. Simply adding 30 minutes per day of targeted instruction, on top of instruction that was already well designed, should “show up” in a curriculum-based measure. This is subtly different from a summative assessment, which also measures how well a student learned content or skills, but usually over a longer period of time. That makes it harder to pin down which specific factor was responsible for any change. Even so, summative assessments are a valuable tool for seeing the big picture of improvement, as opposed to the subtle skill improvements that may lead to longer-lasting results.

A clear example: if we taught 1st grade students to decode and spell vowel teams, we would likely start with a specific sound such as “long a” and teach its vowel team spelling patterns, /ai/ as in chain and /ay/ as in gray. To see how students did on that skill, we would use a curriculum-based measure after a fairly short period of instruction, likely just a few days. However, vowel teams represent much more than one sound, so over the span of a few weeks we would also teach the vowel teams for long e, such as /ea/ in meat and /ee/ in feet, along with long i with /ie/ in pie, and long o with /oa/ in boat and /oe/ in toe. The entire unit might end with a summative assessment to look at overall skill development.

Predictive in design - Risk likelihood

Our goal in using valid and reliable screeners is to NOT need diagnostic tools for the vast majority of our students. The screener alone, especially in K-2, gives educators actionable information when reviewed alongside what the curriculum will cover and how they can instruct students. The point is to use the information to change a student’s trajectory. A screener like DIBELS 8 is actually predicting a student’s end-of-year reading proficiency IF, and this is a BIG IF, all we provide is the normal instruction. In other words, when a student scores below the benchmark on a key measure, the screener is telling us that the student has a reduced likelihood of becoming proficient, because that skill, even when measured in isolation, has a strong correlation with (and in some cases a causal link to) becoming a proficient reader.

The MA law on early literacy screening states that if a student's results are significantly below benchmark, the school must provide targeted instruction, inform the parent or guardian within 30 school days of the results, and outline what the school’s response will be, with an offer of a follow-up discussion. The goal of both the law and the district is quite literally to reduce the number of students who are measured to be “at-risk” for reading failure. That can only be done with an actionable plan built on evidence-based curriculum and instruction, combined with evidence-based strategies to improve outcomes, such as additional targeted instruction.

Pentucket started this work before the early literacy regulations were even passed, and we have done just that: dramatically reduced the percentage of students who are at risk for reading failure. In the 2018-19 school year, 58% of our K-3 students were on track to become proficient readers; by the end of the 2024-25 school year, that number exceeded 80%. The key is that we are not waiting for the first MCAS scores from the end of 3rd grade to see which students are at risk. We start this targeted plan the moment students walk in the door in Kindergarten.

Dyslexia

The early literacy screening work, and in particular the new(ish) MA regulations about it, is often called “dyslexia screening”. It is fair to say that advocates for students with dyslexia worked hard to put these screening regulations in place. The initial response when young students show reading difficulties may not vary much whether the cause is technically dyslexia or something else. However, as we have learned, students with dyslexia do not magically develop this learning disability in 3rd or 4th grade; the signs were likely present long before, hence the need for an early literacy screening process.

Dr. Nadine Gaab, a professor and researcher at Harvard, has done groundbreaking research focused on young children, even before they enter school. In a 2023 podcast recording from the Harvard Graduate School of Education, Dr. Gaab discussed how brain imaging has changed the way we think about identifying children who show early markers that will affect their ability to learn to read.

“So is it that they all kind of start the first day of kindergarten with a clean slate when it comes to brain development, and then the brain changes because they're struggling on a daily basis? Or do these brain alterations predate the onset of formal reading instruction? And what we could show is that some of these brain alterations are already there in infancy, and toddlerhood, and preschool.

So what we can conclude from this is that some children step into their first day of kindergarten with a less optimal brain for learning to read. So you want to find them right then, right? And that has tremendous implications for policy. You don't want to wait and let them fail if you already can determine who will struggle most likely and who will not.” - Dr. Nadine Gaab, Harvard University

What we know about dyslexia is that, like most neurologically based learning differences, it occurs on a spectrum and can vary in which aspects of reading it affects, especially when it co-occurs with another learning or health-related disability. Some in the field estimate that up to 20% of students have dyslexia, whereas other estimates place the figure between 5 and 10%. Regardless of its prevalence, around 40 percent of 4th graders are performing below the NAEP Basic level in reading, so taking a measured and deliberate approach to these issues starting in Kindergarten, as Pentucket has, is a major priority for the district. Having a disability should not mean a lifetime of low growth and low expectations. Special education should not be seen as a "destination" with low expectations upon arrival. In fact, intensity of instruction is often the lever that is needed, with or without special education support.

Data Meetings

As part of a system that uses early literacy screening data well, it is important to have strong practices for managing and leveraging the data that is collected. As we see in the earlier graphs, time is of the essence. For 8 years now, Pentucket has used a Data Meeting structure that has evolved as we became more skilled at understanding and using the data. The concept of a data meeting is far from new, but when it comes to early literacy data, the meetings often need to be both big picture and granular. That way we can understand the impact of our overall curriculum and instruction efforts, and each teacher and team of support staff can better understand which students need which level of support and instruction.

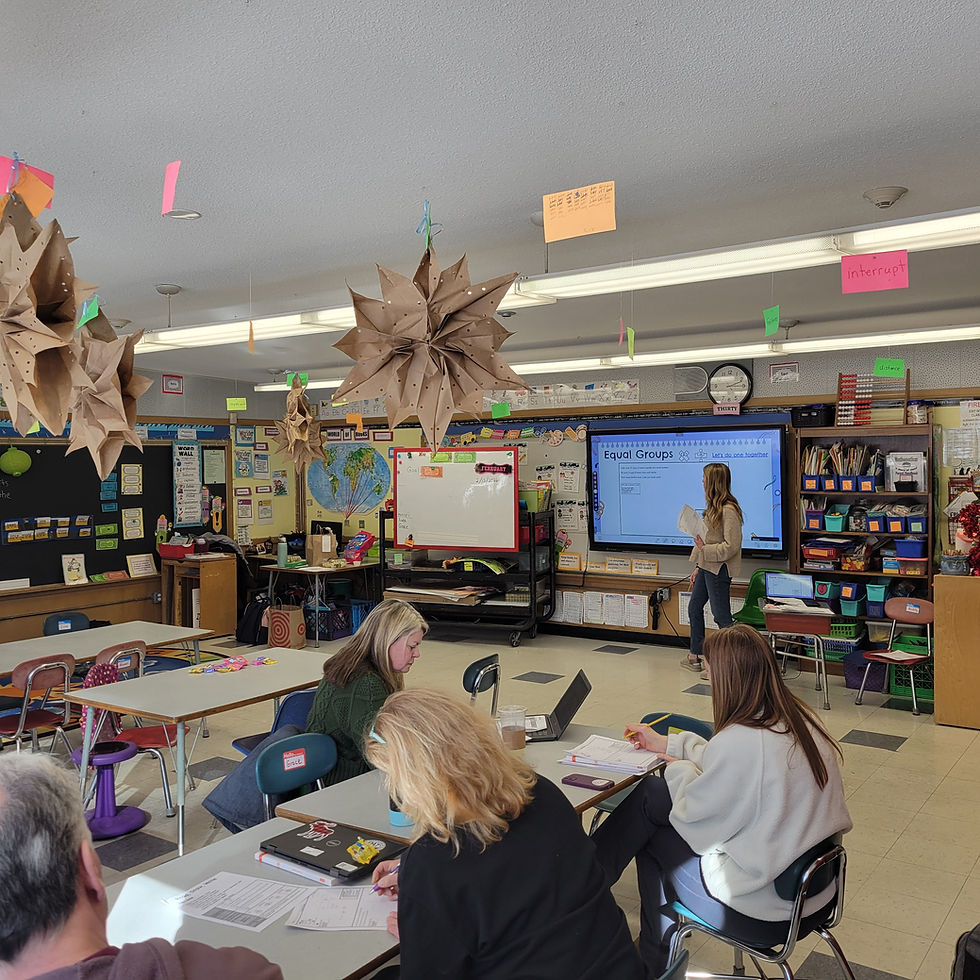

When all the teachers on a grade-level team join forces with district specialists and building leadership, collective efficacy takes shape. We can celebrate success as we see students respond to intentionally designed instruction, and we can triangulate data on particular skills and develop plans to target the needs of students who are behind. Our structure relies on a predictive literacy screener, followed by meetings held right after each screening is completed. Each grade-level team in each building meets for 45 minutes in the fall and the spring, facilitated by our Literacy Coordinator, Jen Hogan. In the winter, the meetings run closer to 90 minutes. It takes nearly 2 weeks to complete these meetings in late January, but they have proven to be the driver of change for both teaching and learning. It is all hands on deck for these meetings.

Grade Level - All Personnel

Progress Monitoring Meetings

Source: The HILL

Between these three meetings, smaller groups of staff meet at the halfway point, about 6-8 weeks after each large grade-level meeting. This serves as a good checkpoint for students who are receiving more targeted instruction. Progress monitoring data and curriculum-based measures are used to evaluate the effectiveness of the plan enacted at the grade-level meeting. These meetings occur twice a year and may result in tweaks or smaller shifts for individual students who have shown great growth or who are experiencing greater difficulty.

Annual Growth for All - Catch Up growth for those who are behind

The data approach we use is largely based on the concept that we want all students to make typical annual growth, while students who start the year behind their peers need to grow at a faster rate. That doesn’t mean all students will meet grade-level benchmarks each year, but when we design a system that prioritizes both annual growth for all and catch-up growth for those who are behind, we are working to change the trajectory of the students who are behind. This approach was written about nearly 25 years ago by leaders of the Kennewick, Washington School District in the book Annual Growth for All Students, Catch-Up Growth for Those Who Are Behind. They used a data-driven approach to systems-level change that resulted in 90% of their 4th graders becoming proficient readers.

To explain this mantra: if a 2nd grader starts the year half a year behind in reading and has a great year, making a full year’s worth of growth that matches their peers’ growth, they are still half a year behind their peers at the end of the year. However, if our screeners tell us which particular areas the student is having difficulty with, and our teachers have been trained to deliver evidence-based instruction, we can change that. By giving teachers the curriculum and resources they need, and by leveraging a schedule designed to give students more instruction when they need it, we can in fact improve that student’s trajectory. While that student may not be on grade level at the end of the year, we can get them closer to that mark, and we have. That is catch-up growth. For students who are behind in reading, it can be the most impactful outcome we can influence.
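The arithmetic behind catch-up growth can be sketched in a few lines of Python. This is a simplified illustration, not a Pentucket tool: it assumes reading level can be expressed in grade-level years, that growth is constant from year to year, and that typical peer growth is exactly 1.0 year per year. The function names and growth rates are hypothetical.

```python
from typing import Optional


def gap_after_years(start_gap: float, student_growth: float,
                    peer_growth: float = 1.0, years: int = 1) -> float:
    """Gap behind peers (in grade-level years) after `years` of instruction.

    Hypothetical model: assumes growth is constant and linear each year,
    which real reading growth is not; it only illustrates the arithmetic.
    """
    # Each year the gap shrinks by how much faster the student grows than peers.
    return start_gap - (student_growth - peer_growth) * years


def years_to_close_gap(start_gap: float, student_growth: float,
                       peer_growth: float = 1.0) -> Optional[float]:
    """Years needed to close the gap, or None if it never closes."""
    extra_growth = student_growth - peer_growth
    if extra_growth <= 0:
        # Matching (or trailing) peer growth never closes the gap.
        return None
    return start_gap / extra_growth


if __name__ == "__main__":
    # A 2nd grader who starts half a year behind, under three growth rates.
    for rate in (1.0, 1.25, 1.5):
        print(rate, gap_after_years(0.5, rate), years_to_close_gap(0.5, rate))
```

Under these assumptions, a full typical year (1.0) leaves the gap at 0.5 and it never closes; growth of 1.25 years shrinks it to 0.25 and would close it in two years; growth of 1.5 years closes it within the year. Real growth is rarely this tidy, but the sketch shows why matching peer growth alone cannot close a gap.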

Data Stories

Our data meetings began 8 years ago with administrators (mainly me) reviewing the data with teachers, sorting through it on a color-coded spreadsheet, and telling the teachers how to group students for intervention and targeted instruction. This was immediately effective at influencing the amount of targeted instruction certain students received. Those who needed more were getting more. However, the changes needed to truly influence day-to-day instruction, and to bring more nuanced and skilled teaching, would only come when the teachers themselves knew and understood the data well enough to make more frequent and informed decisions. This was true for instruction to their entire class and even more true for any student who was behind.

In more recent years, we shifted to a Data Story model, in which each teacher spends time with their own data before the data meeting. Using a set of guiding questions, they come to the meeting with a story to tell about their own classroom data. That story includes an examination of what has worked, what needs improvement, and which students may need varying levels of support. It also includes their own plan for acting on it, drawing on all the system supports in place to make it happen. Jen Hogan’s purposeful evolution to this model has been transformative.

“Instead of coming to data meetings as passive recipients of information, teachers are coming to the meetings with reflection, ideas, and a plan for action. This has allowed us to have conversations about the data and students’ needs on a level we weren’t reaching before and with an understanding that can only be gained from spending time in the data.” - Jen Hogan, Pentucket Literacy Coordinator

The teachers are owning the data and seeing it as a tool for themselves and their classes, and as a basis for ongoing discussions with colleagues about improving learning for students. Not only did this shift coincide with our highest-ever benchmark assessment scores in 2024-25, it also prompted educator self-reflection, visible in the adjustments teachers made to classroom practice as they connected their work to research and evidence.

Dr. Brent Conway

Assistant Superintendent

Pentucket Regional School District

@drconwaypentucket
